dssh: Proof of Concept for an ssh-less, docker-native MPI

Whoo, long time no see (read: blog-post)... :)

But don't you worry guys, I have a nice one this time, I promise.

But CAUTION: if you have kids in the room who honour security by not messing around with ssh, be advised that it might be a rough ride...

Recap Swiss Workshop of the HPC Advisory Council

I went to Lugano again to talk about Docker in HPC, and as always it was a breeze. There were nice talks about D-Wave, FPGAs, GPUs, Deep Learning, and so on.

I talked about Linux Containers with an emphasis on the issues we face and how they might be overcome.

If you want to skip ahead, I start talking about the issues at 0:22:30.

sshd-less OpenMPI?

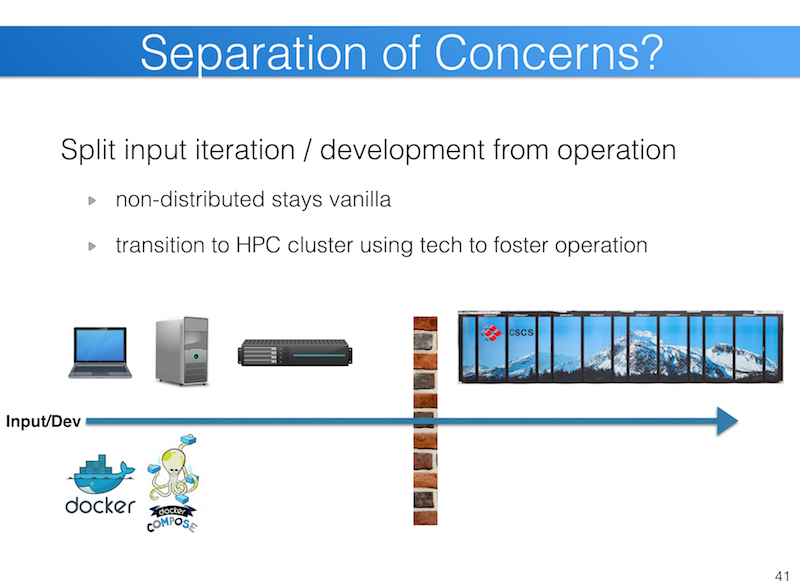

As I pointed out, in my opinion the power of Docker today lies in empowering developers, end-users and scientists to iterate faster on their tasks. For developers that is easy, as they have a simple setup.

For HPC users or scientists it's harder, because they need a distributed system. As long as they can work on one box, it's as easy as developing code.

But once they want to cross over to a multi-host setup, they need sshd to log in to the remote node and slurmd to register the node in SLURM. One could set up both on the bare-metal host and try fishy things, but that doesn't make it any simpler.

UPDATE: As I realised in the next post, I was slightly wrong. Plain mpirun needs sshd/rshd for remote execution; slurm forks the orted daemon using slurmctld.

Proof of Concept

OK, let's see....

I can run the hello world on a remote host with two tasks.

[bob@a7b1e6e98cb1 ~]$ mpirun -n 2 --host 2ec09afc006e /scratch/hello_mpi

Warning: Permanently added '2ec09afc006e,172.17.0.4' (ECDSA) to the list of known hosts.

Process 0 on 2ec09afc006e out of 2

Process 1 on 2ec09afc006e out of 2

[bob@a7b1e6e98cb1 ~]$

Once I stop sshd, I am blocked...

[bob@a7b1e6e98cb1 ~]$ mpirun --allow-run-as-root -n 2 --host 2ec09afc006e /scratch/hello_mpi

ssh: connect to host 2ec09afc006e port 22: Connection refused

But what if I use a different command to start the remote process?

[bob@a7b1e6e98cb1 ~]$ cat /opt/qnib/src/dssh

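#!/bin/bash

# mpirun calls this agent as: dssh <host> <command> [args...]

REMOTE_HOST=$1

shift

# Trace the resulting docker command for debugging.

set -x

# Run the command inside the container whose ID or name matches the remote host.

docker -H unix:///var/run/docker.sock exec -i -u ${USER} ${REMOTE_HOST} $@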

[bob@a7b1e6e98cb1 ~]$

Then it works again... :)

[bob@a7b1e6e98cb1 ~]$ mpirun -mca plm_rsh_agent /opt/qnib/src/dssh -n 2 --host 2ec09afc006e /scratch/hello_mpi

+ docker -H unix:///var/run/docker.sock exec -i -u bob 2ec09afc006e orted --hnp-topo-sig 0N:1S:4L3:4L2:4L1:4C:4H:x86_64 -mca ess '"env"' -mca orte_ess_jobid '"3033858048"' -mca orte_ess_vpid 1 -mca orte_ess_num_procs '"2"' -mca orte_hnp_uri '"3033858048.0;tcp://172.17.0.6:60882"' --tree-spawn -mca plm_rsh_agent '"/opt/qnib/src/dssh"' -mca plm '"rsh"' --tree-spawn

Process 1 on 2ec09afc006e out of 2

Process 0 on 2ec09afc006e out of 2

[bob@a7b1e6e98cb1 ~]$
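The trace above suggests that mpirun hands the agent the target host as the first argument and the orted command line as the rest. To check what dssh would do without touching Docker, a small preview helper could look like the sketch below. The function name `dssh_preview` is made up for illustration and is not part of the original script.

```shell
#!/bin/bash
# Hypothetical helper (not part of the original post): print the docker
# command a dssh-style agent would run, without executing anything.
dssh_preview() {
  local remote_host=$1
  shift
  # As seen in the trace, the agent is called as: <agent> <host> <command> [args...]
  printf '%s\n' "docker -H unix:///var/run/docker.sock exec -i -u ${USER} ${remote_host} $*"
}

# Example call (prints one docker command line):
#   dssh_preview 2ec09afc006e orted --tree-spawn
```

This only joins the arguments into one line for display, so it is useful for eyeballing the command, not for re-executing it.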

It even works with three nodes.

[bob@a7b1e6e98cb1 ~]$ sinfo

PARTITION AVAIL TIMELIMIT NODES STATE NODELIST

all* up infinite 3 idle 1e52c4457a18,2ec09afc006e,a7b1e6e98cb1

odd up infinite 2 idle 2ec09afc006e,a7b1e6e98cb1

even up infinite 1 idle 1e52c4457a18

[bob@a7b1e6e98cb1 ~]$ mpirun -mca plm_rsh_agent /opt/qnib/src/dssh -n 3 --host 2ec09afc006e,a7b1e6e98cb1,1e52c4457a18 /scratch/hello_mpi

+ docker -H unix:///var/run/docker.sock exec -i -u bob 2ec09afc006e orted --hnp-topo-sig 0N:1S:4L3:4L2:4L1:4C:4H:x86_64 -mca ess '"env"' -mca orte_ess_jobid '"2792751104"' -mca orte_ess_vpid 1 -mca orte_ess_num_procs '"3"' -mca orte_hnp_uri '"2792751104.0;tcp://172.17.0.6:60697"' --tree-spawn -mca plm_rsh_agent '"/opt/qnib/src/dssh"' -mca plm '"rsh"' --tree-spawn

+ docker -H unix:///var/run/docker.sock exec -i -u bob 1e52c4457a18 orted --hnp-topo-sig 0N:1S:4L3:4L2:4L1:4C:4H:x86_64 -mca ess '"env"' -mca orte_ess_jobid '"2792751104"' -mca orte_ess_vpid 2 -mca orte_ess_num_procs '"3"' -mca orte_hnp_uri '"2792751104.0;tcp://172.17.0.6:60697"' --tree-spawn -mca plm_rsh_agent '"/opt/qnib/src/dssh"' -mca plm '"rsh"' --tree-spawn

Process 1 on a7b1e6e98cb1 out of 3

Process 2 on 1e52c4457a18 out of 3

Process 0 on 2ec09afc006e out of 3

[bob@a7b1e6e98cb1 ~]$

TODO (at least)

- local-socket: For one, I use the local docker socket /var/run/docker.sock, which is bind-mounted from the single boot2docker instance. The easiest way out would be to run Docker SWARM and point dssh at the SWARM endpoint, which would allow addressing all containers over one endpoint.

- security: Yes, it's not [yet] a secure way to constrain users from spawning processes in containers, but please do not bother. Docker DC, an NGINX proxy in front of the docker socket, or something else will figure this out for us.

- ... and more stuff I haven't thought of yet. :)
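For the local-socket item, a first step might be to make the endpoint configurable, so the same agent can talk to the local socket or to a SWARM manager. The following is only a sketch: the variable `DSSH_DOCKER_HOST` and the function names are my own invention, and the example address in the comment is a placeholder, not a real endpoint.

```shell
#!/bin/bash
# Sketch of a dssh variant with a configurable Docker endpoint.
# DSSH_DOCKER_HOST is a hypothetical variable, not part of the original dssh.
dssh_endpoint() {
  # Fall back to the local socket used by the original script.
  printf '%s\n' "${DSSH_DOCKER_HOST:-unix:///var/run/docker.sock}"
}

dssh_exec() {
  local remote_host=$1
  shift
  # $@ is left unquoted here, exactly as in the original dssh.
  docker -H "$(dssh_endpoint)" exec -i -u "${USER}" "${remote_host}" $@
}

# Only act as the agent when a host and a command were passed in.
# Example: DSSH_DOCKER_HOST=tcp://swarm-manager:2375 (placeholder address)
if [ "$#" -ge 2 ]; then
  dssh_exec "$@"
fi
```

The docker CLI also honours the `DOCKER_HOST` environment variable on its own, so dropping the `-H` flag and exporting that variable would be an even smaller change.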

One step more

In this little exercise I assumed the containers were already running. But what if dssh spawned the containers first and then executed the command? Afterwards the containers could be killed.

If the container names determine where Docker SWARM puts the containers - oh boy!
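A rough sketch of that spawn-first idea could look like this. Everything here is an assumption on my side: the image name `example/openmpi-node`, the variables `DSSH_IMAGE` and `DOCKER`, and the premise that the image ships orted, knows the user, provides `sleep infinity`, and sees the shared /scratch path. It also ignores how mpirun would resolve the host name of a container that does not exist yet.

```shell
#!/bin/bash
# Hypothetical "spawn first" variant of dssh: start a container named after
# the requested host, run the command in it, then remove the container.
dssh_spawn_exec() {
  local remote_host=$1
  shift
  # DOCKER and DSSH_IMAGE are assumed names; DOCKER=echo gives a dry run.
  local docker_bin=${DOCKER:-docker}
  local image=${DSSH_IMAGE:-example/openmpi-node}
  local rc

  # Start a detached, idle container whose name doubles as the MPI host name.
  # Its output goes to stderr so it does not mix with the command's stdout.
  $docker_bin run -d --name "${remote_host}" --hostname "${remote_host}" \
    "${image}" sleep infinity >&2 || return 1

  # Run the command mpirun handed over (typically orted) inside it.
  $docker_bin exec -i -u "${USER}" "${remote_host}" $@
  rc=$?

  # Remove the container regardless of the command's exit status.
  $docker_bin rm -f "${remote_host}" >&2
  return $rc
}
```

Calling it with `DOCKER=echo` prints the three docker invocations instead of running them, which is a cheap way to check the flow before pointing it at a real daemon.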

Call to Arms

... rather to the keyboards... I would like the MPI projects to implement this behaviour directly.

I am hosting the Docker Workshop at ICS 2016 in June. If someone can implement it in a nicer way, I am more than happy to provide 20 min to talk about it. :)